The Hierarchy of Intelligence

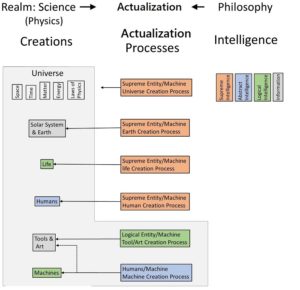

Humans have the ability to design and build (create) machines in the macro world that do incredible things. But humans cannot create life as we know it: our fingers are too big, and we do not have the intelligence to design something that is as “smart” as we are. This is the opinion of this engineer and is not shared by everyone. But if this limitation is true, then the hierarchy shown in Table 1, and more clearly in Figure 3, exists.

Figure 3 can be opened up in a separate window by clicking on it. Each box represents an entity. Each column represents different forms of entities. The left column lists the actualizations that occur in our universe – the physical reality that we experience. The Universe entity box surrounds the header title to indicate that the actualizations are in the realm of the Universe and follow the laws of physics of the Universe. It wraps around the human actualization processes because those actualizations occur in our universe.

We do not know where or how the “supreme actuator” actuated, so it is not shown as being “in our universe”, a depiction that may or may not represent reality.

Figure 3 also assumes the “supreme creator” created the universe and solar system. If one instead holds that the universe and solar system exist from natural causes, then remove the Universe and Earth creation boxes. They have no bearing on the discussion herein and are not mentioned elsewhere on this site. However, since we know from the proof above that life cannot be accounted for by natural causes, the create life and human boxes must remain unless some explanation for our existence other than a superior intelligent entity can be made.

The three realms listed are Science (physics), Actualization (Actualization Processes), and Philosophy (intelligence/logic). We already know about Science and Philosophy; Actualization is new. It perhaps should not be considered a separate realm but rather a combination of Science and Philosophy, hence the arrows illustrating this connection. It exists as an artifact because intelligent manipulation of matter and energy makes it possible to create a whole new class of creations: all the kinds of entities that life, especially man, creates and, most important, life itself.

The realm of philosophy, in the form of information, logical intelligence, abstract intelligence, and supreme intelligence, is inherent in the creators and the creations through the presence of these properties. For this reason, color codes are used to signify levels of intelligence. This shows that it took a supreme intelligence to create life and life with abstract intelligence, and that it takes at least abstract intelligence to create entities with logical intelligence or with embedded information.

Another central theme is that all actualizations are actualized by an entity that uses machines doing intelligent work to execute a process designed by the actualizer. The term actualizer is used instead of designer because, to an engineer, the design process is just one of many tasks needed to create a product.

It is the view of this engineer that the term “design”, as used by those in the field of biology, covers all aspects of actualization (the process of bringing something into existence). The design portion of creation is a mental process, which is not in the realm of physics but in the realm of philosophy. The actual building of the “something” is physical and is in the realm of physics. Actualization is a process that merges the realms of philosophy and physics. This is a fundamental understanding that is missing from the arguments put forward by both the Materialists and the Intelligent Design community.

From Intelligence to Actualized Entities

Philosophers have long recognized the difference between living and nonliving things. Living things contain much more detail and do many more things than natural causes are able to do. However, today we are being taught that science can explain everything and that there is no need to invoke a creator to explain the existence of life. This proposition can be disproved if one can show scientifically that natural causes cannot produce (as opposed to design) the molecules we find in life.[1] This is the approach taken here: an engineer’s way of thinking about the problem. An engineer finds out whether his mental design, and the science behind it, are valid by the success or failure of converting that mental design to physical reality.

The Thermodynamic Impact of Intelligence

From a thermodynamic point of view, the addition of intelligence to physics has an additional energy cost, above all other thermodynamic considerations, just to be able to do intelligent work. This expenditure exists as long as the machines that are doing intelligent work are “running”. The amount of energy actually consumed is a function of the design of the machine, how long it is running, and how hard it is working. There is no way to relate the entropy change to the intelligent work or to the amount of energy consumed.

In addition to the increased quantity of energy required, energy of a potential higher than the free energy of the system is required, for two reasons: first, to do the intelligent work (like moving something uphill), and second, to perform the logical functionality of the machine.

There is an energy cost related to the realm of Actualization, and it should be somehow stated as a law that relates to the realms of physics and philosophy. And perhaps there should be a term designated for the ongoing energy required to supply intelligence for an intelligent machine.

It Starts With Intelligence

The term intelligence is used differently by different people and professions. Most people associate the term with humans: the ability to think, comprehend, and analyze. Engineers also use the term to indicate the ability to process information, as a computer program does. The military uses the term for information that has military value. It is important to understand that intelligence in all forms is not a physical entity; it is an ability to process information. For purposes of this discussion, we need to consider different levels of intelligence, and we propose the terminology described below.

Information

Information is not really a level of intelligence; rather, it is a product of intelligence. It is a static abstraction, not made of matter, energy, space, or time, that is the product of an intelligent entity, not of science or physics. However, information can be embedded into matter using designed languages, protocols, codes, or standards. Examples include the information carried by books, tapes, CDs, computer memory, handwriting, and DNA. These are examples where information is embedded in matter for the purpose of storing it for later use by an intelligent device, such as a computer, a TV set, or an audio player, and, in the case of DNA, for storing the information needed to run the cell’s life process. Information of this sort can only be created by an abstract or supreme intelligent entity.

But information can also be embedded in matter to provide a function other than storing the information. Matter can be shaped to form art or to be a tool. Examples are paintings, sculptures, jewelry, hammers, nails, pliers, windows, passive electronic parts, birds’ nests, and beehives. Items that fall into this category of actualizations can be actualized by entities that have an abstract or logical level of intelligence, as illustrated in Figure 1.

Logical Intelligence

Logical intelligence is the ability to make decisions based on conditions or circumstances. The simplest form is a binary switch. An example would be a switch that is off when there is sufficient light and turns on when there is insufficient light. A logical device must have means to receive an informational input signal, in this case, a light sensor, and an output, in this case, a light bulb and power source. A characteristic of any logical device is that it requires energy to perform the logical function – it takes energy to operate the light sensor and to flip the light switch in this example.
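The light-activated switch described above can be sketched in a few lines of Python. This is only a minimal illustration, not a model of any real device: the class name `LightSwitch`, the `threshold_lux` parameter, and the 50 lux setting are all assumptions introduced here for the example.

```python
from dataclasses import dataclass


@dataclass
class LightSwitch:
    """Hypothetical binary logical device: one sensor input, one lamp output."""

    threshold_lux: float  # assumed setting chosen by the designer

    def lamp_on(self, measured_lux: float) -> bool:
        # The entire "decision": the lamp is on only when light is insufficient.
        return measured_lux < self.threshold_lux


switch = LightSwitch(threshold_lux=50.0)  # 50 lux is an arbitrary example value
for lux in (200.0, 50.0, 10.0):
    state = "on" if switch.lamp_on(lux) else "off"
    print(f"{lux:6.1f} lux -> lamp {state}")
```

The threshold, and the rule that compares the input against it, are supplied by whoever designs the device; the device itself only evaluates the condition. The sketch leaves out the energy needed to run the sensor and flip the switch.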

All machines have some sort of embedded logic. Think of an engine. The logical functionality results from the shape of the crankshaft, camshaft, and valves and from the timing of the ignition spark, which together coordinate the component parts so that each is in the proper position to make the engine work. But it requires an abstract intelligence to design the engine, as shown in Figure 3.

Abstract Intelligence

Abstraction is the ability to think, to invent, and to deal with ideas. This capability goes beyond the logical processing of information. It is a capability that is unique to humans among life on earth. Even though humans have this capability, it is the opinion of this engineer that we will not be able to duplicate it in machines of our design unless we can “reverse engineer” this capability in ourselves.

© 2016 Mike Van Schoiack

“About 7 percent of the weight of living matter is composed of inorganic ions and small molecules such as nucleotides (the building blocks of DNA and RNA), amino acids (the building blocks of proteins), and sugars. All these small molecules can be chemically synthesized in the laboratory. The principal cellular macro-molecules – DNA, RNA and protein – comprise the remainder of living matter. Like the small molecules found in cells, these very large molecules follow the general rules of chemistry and can be chemically synthesized.” Then further down the paragraph, it says this:
