Factory

Designing and building something like a factory requires many coordinated steps. It is first a “top-down” effort, starting with objectives and resources of all types. A plan must be made that figures out how to utilize the available resources to put together the pieces that will make the plant work. The plan must spell out the details of how the plant will perform each process step that will be needed, and how all of the steps can be coordinated to make sure they will work together. Then you have to go to the bottom and design the parts, test them, assemble multiple parts, make sure they work together, fix problems as they arise, and work back upwards until the whole plant is assembled and tested.

As part of the design effort, the system for moving the materials required by the overall process must also be designed, built, and installed.

When the plant is all together, the process must be started. In order to do it successfully, one must preload materials and place all machines in a particular state or, metaphorically speaking, set all switches in the right position and prime all the pumps, before hitting the “start” button that applies power to everything.

The means of doing this has to be designed and in place. But this is only a small fraction of the tasks that must be performed.

Concepts to be added: Life is a process that uses intelligent machines to enable a living entity. There is a need to recognize, based on the fact that life is a process, the functionality that has to exist and the detail involved. It is far greater than presently acknowledged by those writing about life. The “process” elements involve all of the detailed functions that have to be performed to allow life to exist. This is far more involved than general categories such as “reproduction”. Reproduction involves dozens, maybe hundreds or thousands, of actual functional steps.

Ever since Darwin’s book Origin of Species was published in 1859, there has been a steady advance toward the idea that our existence can be explained by science, with no need to invoke a God or other supernatural entity. Darwin’s theory seemingly provided an explanation of how all life forms evolved from the first cell and, by extension, assumes a natural-cause explanation of the origin of life.

Since Darwin wrote his book, much has been learned about life. It is much more complicated than expected. We now know that organisms have what appears to be a designed mechanism for evolution, one which can account for the adaptation of plant and animal forms, but not the evolution of new forms. And there has been no progress in developing a theory of how life could have begun from natural causes.

Studying the inner workings of the cell is science, and the knowledge gained makes the argument for Neo-Darwinism weaker by the day. The reality is that if Darwin knew what we know today about life and the record of life, and if he were honest, he would not have written his book, given the doubts he expressed and the methods of science that he expounded. Even so, the mainstream field of biology clings to the belief in Darwin’s theory and to the idea that life began by natural causes.

So today there is a huge chasm in the field of biology: Darwinists vs. those who embrace the evidence of what is being learned.

This engineer has become interested in this field partly because of the natural need to know how and why we exist, and partly because the field of chemistry seems to deny, or perhaps simply lacks the knowledge to see, the difference between chemical reactions and the physical building of macro-molecules.

A probable explanation of this chasm and how it has evolved is that biologists early on did not realize that actual molecular machinery was involved in the cell. Once they did, they assumed that these machines could be described as an advanced catalyst, hence the term enzyme, defined as a protein catalyst. They were not, and it seems for the most part still are not, aware that they are dealing with a whole different paradigm that involves machinery, sensors, actuators and process control systems in addition to the chemistry in which they are trained.

It is easy, as an engineer, to look at this situation and recognize what seem to be pitfalls, but limited knowledge of biology and chemistry leaves many questions unanswered and leads to the possibility that the conclusions drawn may be wrong.

Two Paradigms

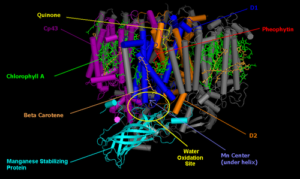

These are two separate paradigms. Chemical reactions are due to natural causes driven by the laws of physics and chemistry. The physical building of macro-molecules is the work of intelligent machines working in a process control system, intelligently using the laws of physics to accomplish things that natural causes cannot. Life uses both of them to exist. The world of biology still seems to think that enzymes are just catalysts that speed up a natural chemical reaction.

Life is a Process

From this engineer’s perspective, the fact that life is an intelligently controlled process is the easiest way to appreciate and understand the difference between living and non-living matter; that life is matter with embedded intelligence.

The field of electronics deals with devices in the nm range, which is the same size range as proteins. But there are several differences between ultra-small electronic entities and life molecules. Electronic chips are layered, two-dimensional, static devices, whereas life molecules, in the living condition, move in all three dimensions. The other big difference is that even though electronic structures at these dimensions are hard to see, the designs normally supply test points that allow the engineer to discern what is happening by electrical measurements. The point is that we do not have the means to track exactly what is happening inside the cell because “our fingers are too large” and we cannot see or probe inside the cell walls to “reverse engineer” the life process that is in motion.

Past, Present, Future

However, the bio-medical field is doing amazing things involving manipulation of the genome. This engineer is clueless as to how these feats are accomplished. The assumption is that researchers are learning how to use the cellular machinery to determine functionality indirectly. This is scary because it is obvious that the overall understanding of the process control system in the cell is very limited. In addition, the lack of knowledge of process control in general leads to the prospect of not appreciating the probable interrelationships between the numerous control loops that must exist in the cell. This could lead to unintended consequences, some of which could be very subtle and/or dangerous.

It has been intuitively obvious to man since the earliest recorded history that life is something much different from inanimate nature. Darwinism has given those who do not want to believe that an intelligent entity was responsible for our existence a way, as Dawkins put it, “to be intellectually fulfilled.” To others, life itself is proof that there must have been some intelligent intervention with nature in order to create life.

The reality is that we do not understand the totality of physics: dark matter and dark energy, gravity, and entanglement, for example. Perhaps if we did, we would know which side of this chasm is correct. The science we do understand leaves Darwinism in a black hole.

Frustrations of This Engineer

Lack of appreciation of the impacts of the 2nd law of thermodynamics:

The reason that natural causes cannot build all of the molecules of life

The reason why there is no pathway for complexity to increase over time to “evolve” first life

The reason that life has to be a process

Terminology used in Biology & lack of precision of definitions

Understanding the full implications of life being a process

Appreciation of the difference between information and intelligence

Appreciation of the difference between design and actually building something

Appreciation of the difference between static things and machines that do work.

Purpose of This (ID) Site

This site will be a forum to discuss the frustrations listed above. The hope is that others, particularly thoughtful engineers who share some of these frustrations and would like to discuss these and similar topics, will find this site.

It seems obvious that the fields of biology and engineering are merging, and it is exciting to be able to participate in this merger. If this engineer were a young man, he would become a bio-engineer.

This engineer, being very interested in understanding what is known about life, what it is and how it works, has spent countless hours reading and thinking about the topic. The engineer’s perspective is quite different from the Darwinist point of view, and the differences have many implications: thermodynamic, theoretical, philosophic, political, and theological.

It is the belief of this engineer that the approximately 97% of the DNA that is not used to express proteins is essentially the life process “code”; that chunks of this DNA are converted to RNA that works with proteins to provide the functionality needed for the life process. This assumption is based on what seems to be the obvious reality that life is a process, and on knowing what is required to make a process work, coupled with the knowledge that the protein/RNA combination is already known to provide some of the functionality of the life process, e.g., the ribosome.

The key to understanding life, it seems to this engineer, is to understand the details of how this process works. As mentioned, this is hard because our fingers and eyes are too big. Engineers like myself have ideas about what to look for, including some thoughts regarding where and how. But we (speaking for myself) have only a little knowledge of what is currently known, and almost zero knowledge about the tools available to gain additional knowledge.

So the purpose of this site is to serve as a forum for this engineer and others to share ideas, learning resources and, in general, be a place to kindle thinking on the topic.

To submit a post, please use the Contact form in the footer.

It was pointed out in the “Fake Biology” post that the world of biology defines an enzyme to be a protein catalyst, and a catalyst to be a molecule that “speeds up a chemical reaction.”
